Apr 4

Improve ChatGPT Performance with Prompt Engineering

How to Ask Questions to ChatGPT to Maximize the Chances of a Successful Answer

ChatGPT generates responses using a technique called autoregression, which involves predicting the most likely next word in a sequence based on the previous words. However, if you try ChatGPT, you will soon realize that the quality of an answer also depends on the quality of the question.

The secret to getting the best possible answer is to understand how ChatGPT generates it and to formulate the question accordingly.

In this article, we will discuss a few tricks for writing good ChatGPT prompts so that you can get the best results for your task.

⚠️ Terminology Recap: The technical name for a question is a prompt, and putting some thought into the question we ask in order to maximize ChatGPT’s output is known as prompt engineering.

Ciphers Lab is a USA-based Digital Product Studio, that provides services like Web App design & development, Mobile Application design & development, UI/UX, Chatbot development, E-commerce development, Designing & Branding, Social Media Marketing, and virtual CTO. For further information. get connected now

Model Capabilities Depend on the Context

When starting with ChatGPT, one common mistake is to believe that its capabilities are fixed across all contexts.

For example, if ChatGPT can successfully answer a specific question or perform a certain task, we may assume it can also answer questions in any domain or solve other types of tasks.

But that is not true :(

📚 ChatGPT has been trained on a huge but still limited dataset, and it has been optimized for certain tasks.

Nevertheless, using the right prompts can help ChatGPT find the correct answer in an unfamiliar domain or master a new task.

Model Capabilities Also Depend on the Timing

It can also happen that ChatGPT gives wrong answers when solving complex tasks.

As with humans: if you ask a person to add up 4-digit numbers, they will need some time to think before answering with the correct result. If they do it in a hurry, they could easily miscalculate. Similarly, if you give ChatGPT a task that’s too complex to do in the time it takes to calculate its next token, it may confabulate an incorrect guess.

🧠 Yet, as with humans, that doesn’t necessarily mean the model is incapable of the task. Given some time to reason things out, the model may still be able to answer reliably.

There are ways to guide ChatGPT to succeed in solving your complex tasks.

And now it is time! Let’s explore those techniques!

Zero-shot prompting

People use zero-shot prompting most of the time when using ChatGPT. We call it zero-shot when the person asks a question straight away, without giving the model any examples.

In those cases, if the question is simple enough, the model will be able to provide a coherent response. However, with this method the user has little control over the accuracy or the format of the response.

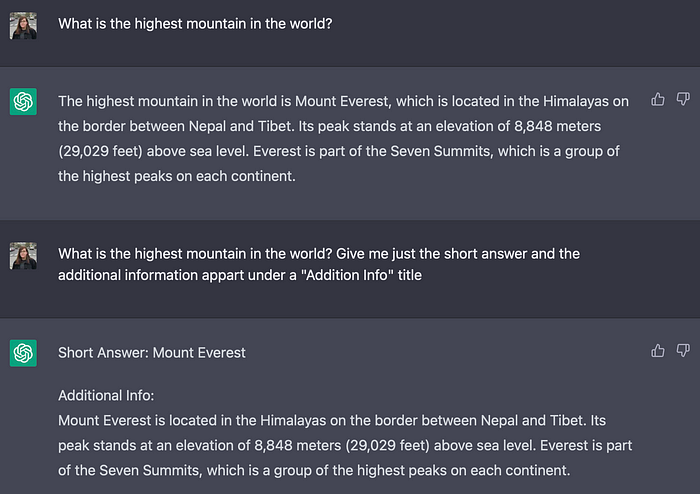

Here are a few examples of zero-shot prompting in ChatGPT:

In these examples, the prompt consists of a simple question or statement, and ChatGPT provides the correct answer without any examples or prior training on the specific task or topic.

🙌🏼 This demonstrates the power of zero-shot prompting and the ability of large language models like ChatGPT to generate human-like answers and potentially generalize to new tasks and domains.
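A minimal sketch of what a zero-shot prompt looks like in code, assuming the role/content message format commonly used by chat-style APIs. The helper name build_zero_shot_prompt is hypothetical and no model is actually called; the point is that the prompt contains only the question, with no examples.

```python
def build_zero_shot_prompt(question: str) -> list[dict[str, str]]:
    """Wrap a bare question as a single chat message (hypothetical helper)."""
    # Zero-shot: no examples and no extra instructions, only the question.
    return [{"role": "user", "content": question.strip()}]


messages = build_zero_shot_prompt("What is the capital of France?")
print(messages)
# [{'role': 'user', 'content': 'What is the capital of France?'}]
```

Everything the model needs has to come from the question itself, which is why accuracy and format are hard to control with this approach.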

Improve your Zero-Shot

There are a few tricks for writing good prompts with zero-shot prompting, such as giving clearer instructions or splitting complex tasks into simpler subtasks. Nevertheless, these tricks can feel a bit empty without concrete examples or an understanding of the theory behind them.

There is a good standard sentence to include in your prompts no matter which complex task you are asking ChatGPT to solve:

Let's think step by step

The “Let’s Think” trick

The easiest way to prompt a model to reason its way to the answer, published by Takeshi Kojima et al. in 2022, is to simply start the answer with Let's think step by step.

This simple statement can help the model steer itself toward the correct output. Because it makes the model reason step by step, it also gives the model the time it needs to generate the correct answer.
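A minimal sketch of the trick as a prompt template, assuming a plain-text Q:/A: layout in the spirit of the Kojima et al. paper: the answer is started with Let's think step by step so that the model continues with its reasoning. The function name and the sample question are illustrative, not taken from the paper.

```python
TRIGGER = "Let's think step by step."


def build_step_by_step_prompt(question: str) -> str:
    """Zero-shot prompt whose answer starts with the reasoning trigger."""
    # Starting the answer with the trigger nudges the model to write out
    # intermediate steps before committing to a final answer.
    return f"Q: {question.strip()}\nA: {TRIGGER}"


prompt = build_step_by_step_prompt(
    "I have 3 boxes with 4 pens each and give away 5 pens. How many pens are left?"
)
print(prompt)
# Q: I have 3 boxes with 4 pens each and give away 5 pens. How many pens are left?
# A: Let's think step by step.
```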

The Let's think step by step trick works well on complex tasks such as math problems or questions requiring some reasoning, but also on general questions:

As we can see, ChatGPT only provides the most up-to-date answer when the Let's think step by step statement is used.

💭 If you apply this technique to your own tasks, don’t be afraid to experiment with customizing the instruction! Let's think step by step is rather generic, so you may find better performance with instructions that hew to a stricter format customized to your use case.

For example, more structured variants can also give notable improvements, such as: First, think step by step about why X might be true. Second, think step by step about why Y might be true. Third, think step by step about whether X or Y makes more sense.

This technique essentially forces the model to reason its way to the answer gradually!
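The structured variant can be sketched as a small template that fills in X and Y. This is an illustration assuming plain-text prompts; build_structured_prompt and the houseplant question are hypothetical.

```python
def build_structured_prompt(question: str, x: str, y: str) -> str:
    """Append a three-step reasoning instruction comparing two hypotheses."""
    steps = [
        f"First, think step by step about why {x} might be true.",
        f"Second, think step by step about why {y} might be true.",
        f"Third, think step by step about whether {x} or {y} makes more sense.",
    ]
    # The question comes first, then one instruction per line.
    return question.strip() + "\n\n" + "\n".join(steps)


prompt = build_structured_prompt(
    "Why are the leaves of my houseplant turning yellow?",
    x="overwatering",
    y="too little sunlight",
)
print(prompt)
```

Keeping each step on its own line makes it easy to swap in a different number of hypotheses or a different final step for your use case.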

Impressed by Let’s think step by step? Find out more about it in “Use This Short Prompt to Boost ChatGPT’s Outcome: Get the Most Out of ChatGPT ‘Step by Step’” on medium.com.


One-shot prompting

In some cases, it’s easier to show the model what you want rather than tell the model what you want. This is especially useful if you need an answer in a specific format or with a certain level of accuracy.

While zero-shot prompting enables ChatGPT to generate responses to new tasks without any examples, one-shot prompting involves showing the model a single example similar to the target task, allowing it to quickly adapt and provide more accurate responses.

🔫 This technique lets the model generate natural language text from a limited amount of input data, such as a single example or template, and it makes the outputs more predictable.

For example, if a company wanted to create a chatbot to assist with customer support, they might use one-shot prompting by including a single customer inquiry and its ideal response in the prompt, so that ChatGPT handles new inquiries in the same way without any fine-tuning.

As mentioned above, one-shot prompting is especially useful if you need a certain output format:

As we can see from the example above, ChatGPT adapts its output to the desired format.
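A minimal sketch of a one-shot prompt for the customer-support case, assuming plain-text Input:/Output: labels. The helper, the product name X200, and the JSON fields are all hypothetical; the single worked example is what tells the model which format to follow.

```python
def build_one_shot_prompt(
    instruction: str, example_input: str, example_output: str, new_input: str
) -> str:
    """One worked example, then the new input for the model to complete."""
    # The single example fixes the output format; the prompt ends at
    # "Output:" so the model's continuation is the answer for new_input.
    return (
        f"{instruction.strip()}\n\n"
        f"Input: {example_input}\n"
        f"Output: {example_output}\n\n"
        f"Input: {new_input}\n"
        "Output:"
    )


prompt = build_one_shot_prompt(
    instruction="Extract the product and the issue from the customer message as JSON.",
    example_input="My X200 headphones stopped charging after a week.",
    example_output='{"product": "X200 headphones", "issue": "stopped charging"}',
    new_input="The lid of my blender cracked the first time I used it.",
)
print(prompt)
```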

Few-shot prompting

While zero-shot tricks and one-shot prompting are already powerful tools, few-shot prompting takes the concept a step further by giving the model more examples from which to learn the new task.

Few-shot prompting was studied by Jason Wei and Denny Zhou et al. from Google, and it can be seen as demonstrating to the model how to answer through a few examples.

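📊 Few-shot prompting works the same way as one-shot prompting, but more examples are given, typically anywhere from a few to around a hundred, depending on how many fit in the context window of the model. Performance generally improves as more examples are added, although the gains tend to level off.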

This approach is particularly useful for tasks that require more specialized or domain-specific knowledge, where the model may not have sufficient background knowledge to perform the task using zero-shot or one-shot prompting.

For example, imagine a company wants to develop a chatbot to assist with medical diagnoses. While one-shot prompting may be effective for guiding the model on a few common medical conditions, it may not be sufficient for more complex diagnoses. In this case, few-shot prompting could be used to include a small set of medical cases and diagnoses in the prompt, allowing the chatbot to give more accurate and better-informed answers.

In this case, you could include the following information in your prompt:

Prompt: Q: What are the symptoms of the flu?

A: The flu is a respiratory illness caused by the influenza virus. Common symptoms include fever, cough, sore throat, runny or stuffy nose, body aches, headache, chills, and fatigue.

Q: What are the symptoms of a heart attack?

A: A heart attack occurs when blood flow to the heart muscle is blocked. Common symptoms include chest pain or discomfort, shortness of breath, lightheadedness, nausea, and pain or discomfort in the arms, back, neck, jaw or stomach.

Q: What are the symptoms of a stroke?

A: A stroke occurs when blood flow to the brain is disrupted. Common symptoms include sudden weakness or numbness in the face, arm, or leg (especially on one side of the body), confusion, trouble speaking or understanding speech, vision problems, dizziness, and severe headache.

With this prompt, the chatbot can use the provided Q&A pairs as context to help diagnose medical conditions. When prompted with a symptom such as “I have a fever and a cough”, the chatbot can leverage its understanding of symptoms from the provided Q&A pairs to generate a more informed response.
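The medical prompt above can also be assembled programmatically from a list of Q&A pairs. This is a sketch assuming a plain-text Q:/A: layout; build_few_shot_prompt is a hypothetical helper, and the answers are shortened versions of the ones in the prompt above.

```python
# Q&A pairs adapted (shortened) from the prompt above.
EXAMPLES = [
    ("What are the symptoms of the flu?",
     "Common symptoms include fever, cough, sore throat, body aches, headache, chills, and fatigue."),
    ("What are the symptoms of a heart attack?",
     "Common symptoms include chest pain or discomfort, shortness of breath, lightheadedness, and nausea."),
    ("What are the symptoms of a stroke?",
     "Common symptoms include sudden weakness or numbness on one side of the body, confusion, and trouble speaking."),
]


def build_few_shot_prompt(examples: list[tuple[str, str]], new_question: str) -> str:
    """Join example Q&A pairs, then leave the final answer open."""
    blocks = [f"Q: {q}\nA: {a}" for q, a in examples]
    # The trailing "A:" is where the model's answer should start.
    blocks.append(f"Q: {new_question}\nA:")
    return "\n\n".join(blocks)


prompt = build_few_shot_prompt(EXAMPLES, "I have a fever and a cough. What could it be?")
print(prompt)
```

Keeping the examples in a list makes it easy to add or remove cases and check how the answers change as the number of examples grows.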


Advantages of Few-Shot prompting

One advantage of the few-shot example-based approach relative to the Let's think step by step technique is that you can more easily specify the format, length, and style of reasoning that you want the model to perform.

This can be particularly helpful in cases where the model is not initially reasoning in the right way or to the right depth.
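To illustrate, here is a sketch of few-shot examples that pin down both the reasoning style (one short Reasoning: line) and the answer format (a bare Answer: line). The labels, the questions, and the helper build_reasoning_prompt are hypothetical choices, not a fixed convention.

```python
# Worked examples whose answers show the reasoning style we want:
# one short "Reasoning:" line, then a bare "Answer:" line.
EXAMPLES = [
    (
        "A shop sells pens at 3 dollars each. How much do 4 pens cost?",
        "Reasoning: Each pen costs 3 dollars, so 4 pens cost 4 * 3 = 12 dollars.\nAnswer: 12",
    ),
    (
        "Tom had 10 apples and gave away 4. How many are left?",
        "Reasoning: He started with 10 and gave away 4, so 10 - 4 = 6 remain.\nAnswer: 6",
    ),
]


def build_reasoning_prompt(question: str) -> str:
    """Few-shot prompt that asks the model to copy the example reasoning format."""
    parts = [f"Q: {q}\nA: {a}" for q, a in EXAMPLES]
    # End with "A: Reasoning:" so the model continues in the same format.
    parts.append(f"Q: {question}\nA: Reasoning:")
    return "\n\n".join(parts)


prompt = build_reasoning_prompt("A box holds 6 eggs. How many eggs are in 5 boxes?")
print(prompt)
```

Compared with a generic Let's think step by step, the examples control how long the reasoning is and where the final answer appears, which also makes the answer easier to extract.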

💪🏼 Finally, if few-shot prompting is not enough for your task, you can also try actually fine-tuning your own ChatGPT model by applying transfer learning to your own data. Check out the following article to fine-tune ChatGPT with Python:

And that is it! Prompt engineering techniques such as zero-shot, one-shot and few-shot prompting can give you more flexibility and control when creating outputs by taking advantage of the power of models like ChatGPT.

Now that you know how prompt engineering can impact your outputs, try the techniques in this article to build more reliable, high-performing prompts!

And one more fact before you go!

Maximizing ChatGPT outcomes has become so popular that we have started seeing a lot of job offers for Prompt Engineering roles, seeking people who know how to get the desired information from ChatGPT.

Let me know in the comments if you want me to write about more techniques to improve reliability of the language models!

